Using your workspace

Getting started, accessing your data files, available software, locking your workspace and signing out

Getting started in the DataLab workspace

When you have successfully logged into your Virtual Machine (VM) instance, your DataLab workspace looks like this:

You can use DataLab in a similar way to using other secure networked systems, where you can securely see, use and share data files, analysis and output with the other members of your project team.

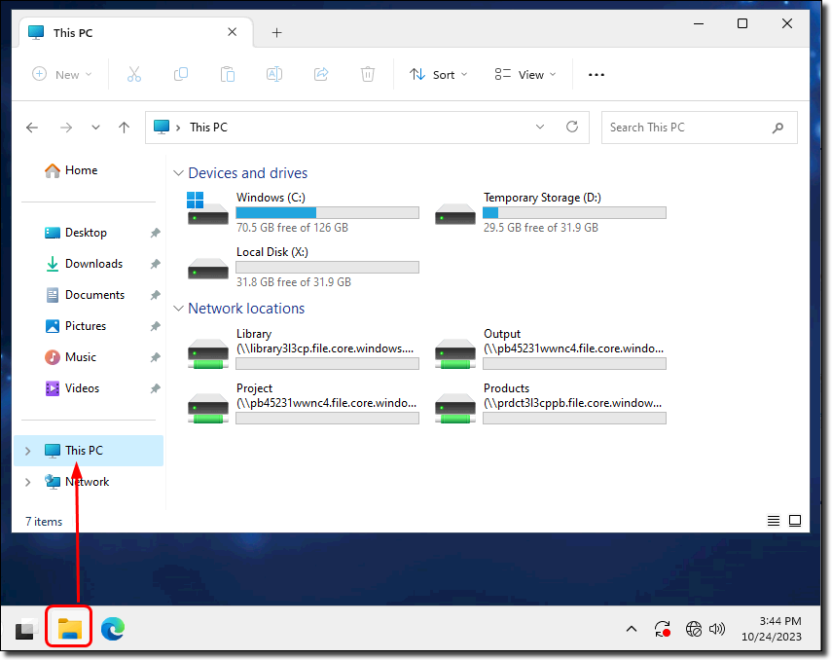

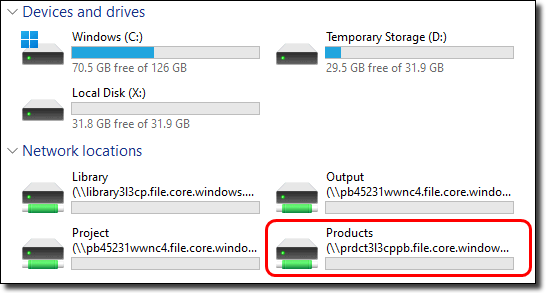

Open File Explorer and click on This PC to see the network drives you have access to:

- C drive: Can be used to run scripts and to create Python virtual environments that are not managed through Jupyter Notebook, JupyterLab or Spyder. NOTE: Do not use the C drive to store your data or scripts permanently. Although you may be able to save files there temporarily, the drive is destroyed on each 30-day rebuild and any information stored there cannot be recovered.

- Library (L drive): All researchers can see all files in the Library drive. This is where we upload support information, such as statistical language documentation, ANZSIC classification and general access guides for non-standard products. Files cannot be saved to this drive.

- Output (O drive): Any output you want the ABS to clear should be saved to this drive. Only members of your team can see this drive. See also Request output clearance. Information is backed up nightly and retained for 14 days. Information in this folder remains unaffected by a rebuild.

- Project (P drive): A shared space for your team to work in and store all your project files, as well as set up and run Python and R scripts. Only members of your team can see this drive. Information is backed up nightly and retained for 14 days. Information in this folder remains unaffected by a rebuild. The default storage is 1TB. You will need to review and delete unnecessary files as your project files grow over time. If necessary, an increase to this storage can be requested via the Contact us page. There may be a cost for additional storage.

- Products (R drive): Access the data files that have been approved for your project. However, it is best to use the 'My Data Products' shortcut on your desktop, as this shows only the datasets you have been approved to access rather than the short names of all datasets. Files cannot be saved to this drive.

- Local Disk (X drive): If you have been granted local disk space, you can use it to run jobs on offline virtual machines (desktops). You may want to request this option if you are actively involved in multiple projects. There may be a cost associated with attaching local disk space to your VM. The local disk is only present if it has been allocated to your VM. To request local disk space, contact the ABS via the Contact us page.

- Drives A and D are not to be used. Information saved there is either destroyed with each nightly shutdown and 30-day rebuild, or has restricted access. Attempting to read from or write to drive A or D will trigger a group policy error due to access controls. Instead, use the C drive or consult your project lead to request local disk space.
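Because the Project drive has a default allocation of 1TB and you are expected to review and delete unnecessary files as your project grows, it can help to see which folders take up the most space. The following is a minimal Python sketch (Python is available in DataLab), assuming the Project drive is mapped as `P:\` on your VM; `folder_sizes` is a hypothetical helper, not an ABS-provided tool, and the 20-result cutoff is arbitrary.

```python
from __future__ import annotations

from pathlib import Path


def folder_sizes(root: Path) -> list[tuple[str, int]]:
    """Return (top-level entry name, total bytes) pairs under root, largest first."""
    sizes: dict[str, int] = {}
    for child in root.iterdir():
        if child.is_file():
            # Loose files at the top level are counted on their own.
            sizes[child.name] = child.stat().st_size
            continue
        total = 0
        # rglob("*") walks every file and folder beneath this folder.
        for path in child.rglob("*"):
            if path.is_file():
                total += path.stat().st_size
        sizes[child.name] = total
    return sorted(sizes.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Assumption: the Project drive is mapped to P:\ on your VM.
    project_root = Path("P:/")
    if project_root.is_dir():
        for name, size in folder_sizes(project_root)[:20]:
            print(f"{size / 1024**3:8.2f} GB  {name}")
    else:
        print("P drive not found; try the 'Refresh Network' shortcut.")
```

Walking a large drive this way can take a while, so you may prefer to point it at a single subfolder first.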

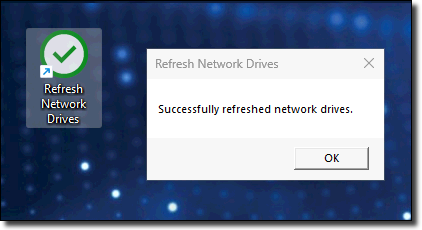

If your network drives do not appear in File Explorer, you can click the 'Refresh Network' shortcut on the desktop. A confirmation message appears when this has been successfully refreshed.
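If your scripts depend on the network drives, a quick check at the start of a session can tell you whether you need to use the 'Refresh Network' shortcut before running anything. This is a minimal Python sketch, assuming the drive letters listed on this page; `check_drives` is a hypothetical helper. It only reports whether each drive root is reachable and does not refresh anything. Drives A and D are deliberately left out, because accessing them triggers a group policy error.

```python
from pathlib import Path

# Drive letters described on this page (A and D excluded on purpose).
EXPECTED_DRIVES = {
    "C": "Local (temporary, wiped on rebuild)",
    "L": "Library",
    "O": "Output",
    "P": "Project",
    "R": "Products",
    "X": "Local Disk (only if allocated)",
}


def _reachable(root: Path) -> bool:
    """True if root is an accessible directory; any OS error counts as unavailable."""
    try:
        return root.is_dir()
    except OSError:
        # For example, access denied on a drive you are not permitted to use.
        return False


def check_drives(drives: dict = EXPECTED_DRIVES) -> dict:
    """Map each drive letter to whether its root directory is currently reachable."""
    return {letter: _reachable(Path(f"{letter}:/")) for letter in drives}


if __name__ == "__main__":
    for letter, present in check_drives().items():
        status = "available" if present else "MISSING"
        print(f"{letter}: {EXPECTED_DRIVES[letter]:<38} {status}")
```

A missing X drive is expected unless local disk space has been allocated to your VM.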

Accessing your data files

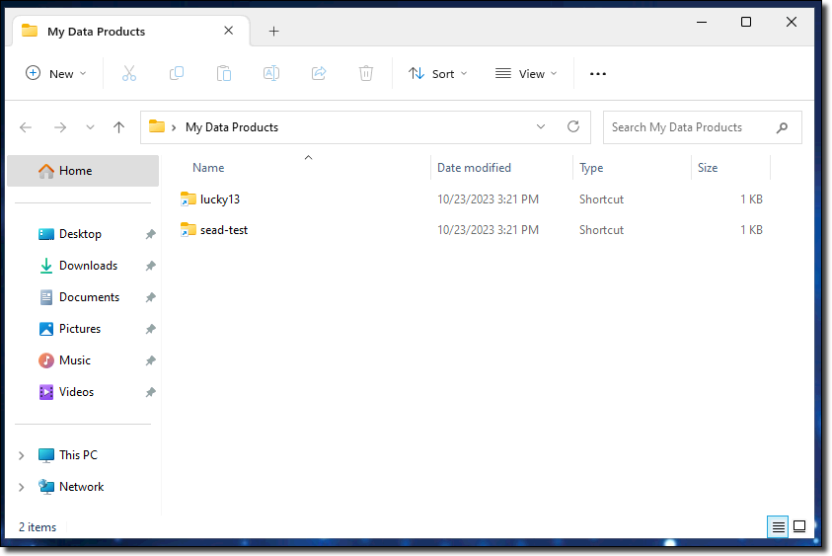

To access the data files for your project, use the 'My Data Products' shortcut on your desktop.

The My Data Products folder displays only the products approved for your project.

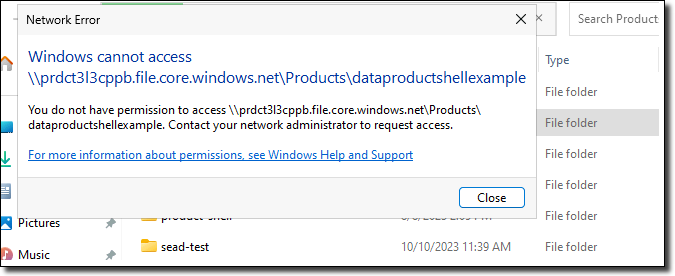

Selecting the 'Products' drive shows you the short names of all datasets loaded into the DataLab. However, if you try to open a file that is not approved for your project, access is denied and you receive an error.
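If a script reads from the Products drive, it can handle the access-denied case explicitly rather than stopping with an unhandled error. This is a minimal Python sketch, assuming (not confirmed on this page) that a denied file surfaces in Python on Windows as a `PermissionError`; `try_read_head` is a hypothetical helper.

```python
from __future__ import annotations

from pathlib import Path


def try_read_head(path: Path, n_bytes: int = 1024) -> bytes | None:
    """Return the first n_bytes of a file, or None if access is denied."""
    try:
        with path.open("rb") as handle:
            return handle.read(n_bytes)
    except PermissionError:
        # Assumption: files not approved for your project raise PermissionError.
        print(f"Access denied: {path} is probably not approved for this project.")
        return None
```

Other errors, such as a mistyped path (`FileNotFoundError`), are intentionally left to propagate so they are not mistaken for an approval problem.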

Available software

To access the latest version of each application in the DataLab, use the ‘Manage’ option in the portal to move to the most recent VM version available. VM versions are updated annually, with a transition period to allow time for migration.

Software can be opened using the shortcuts on your desktop or by using search on the Taskbar.

All researchers have access to these applications in the DataLab:

| Application | Version (2025 VM) |
|---|---|
| LibreOffice | 7.6.7.1 |
| Acrobat Reader | |
| Azure Storage Explorer | 1.33.0 |
| Notepad++ | 8.6.5 |
| QGIS | 3.36 |
| WinMerge | 2.16.40 |
| Git | 2.45.0 |
| Stata | MP18 |
| CUDA | 12.1.1 |
| R | 4.4.1 |
| RStudio | 2024.04 |
| Rtools | 44 |
| Python | 3.11 |
| Jupyter Notebook & JupyterLab | |
| Spyder | |
| PostgreSQL | 16 |
| Posit (package manager)* | |
| 7-Zip | 23.01 |

*Approved CRAN and PyPI packages are available in DataLab.

If required, you can also request:

| Application | Version |
|---|---|
| SAS | 9.4 (Enterprise Guide 8.2) |
| Azure Databricks | |

Microsoft Word and Excel are not currently available, as these applications require an internet connection, which is not supported in a secure system like DataLab. LibreOffice is offered as an alternative, with similar capabilities to Microsoft Office.

Firefox and Edge are available to support access to Databricks and to browser-based tools such as Jupyter notebooks for working in Python or R. These browsers cannot be used to browse the internet.

If you require a specific statistical package not currently available, or have an enquiry regarding software versioning, submit a request using the Contact us page.

Managing your R & Python packages explains how you can manage R and Python packages using the Posit Package Manager shortcut on your desktop.

Databricks

Databricks is available to projects within the DataLab as a non-standard product.

What is Databricks?

Databricks is a cloud-based Big Data processing platform which provides users with an integrated environment to collaborate on projects and offers a range of tools for data exploration, visualisation and analysis. Within the Databricks environment, users can:

- Build pipelines for streaming data processing.

- Build and run machine learning tools.

- Create interactive dashboards.

- Take advantage of scalable distributed computing capability.

Project analysts will also have access to the Databricks Academy training subscription (an online library of Databricks training guides), as well as instructions in the ABS shared library on how to set up the Databricks workspace.

NOTE: If you are using Azure Data Lake containers with Databricks, Azure Storage Explorer is available as an alternative to AzCopy for managing and transferring files between your file share drives (such as the Output and Project drives) and blob storage. Refer to the 'Azure Storage Explorer User Guide' in the shared library drive.

NOTE: The project data lake storage is not currently backed up due to implementation restrictions, so we strongly recommend regularly copying your files from the project data lake storage to your project file share storage (your P drive).

How do I allocate a Databricks workspace to my project?

To allocate a Databricks workspace to your project, you will need to submit a request to info@mydata.abs.gov.au. Once your project is allocated a Databricks workspace, it can be accessed from within your VM using the installed Edge or Firefox browsers.

How will costing work?

Access to Databricks will be per project and charged quarterly based on usage. Projects can choose between a low and a high usage profile, depending on how much compute resource project analysts are expected to consume. Both profiles receive the same level of service.

Because Databricks uses separate compute resources, projects requesting access to Databricks should consider whether they still need to maintain their existing VM sizes. Scaling down existing VMs may reduce project costs.

What are the cluster policy arrangements?

Analysts can be provisioned with the following cluster policy options:

| Instance | Server purpose | Max autoscale workers | vCPUs | RAM | Databricks Units |
|---|---|---|---|---|---|
| DS3 v2 | General purpose | 5 | 4 | 14GB | 0.75 |
| D13 v2 | Memory optimised | 4 | 8 | 56GB | 2 |
| F16s v2 | Compute optimised | 4 | 16 | 32GB | 3 |

Databricks cluster policies will restrict the type and number of workers you can provision for a cluster. If an existing policy does not fit your requirements, you can request a new policy via the ABS. All information regarding this can be found in the ABS shared library.
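Because the usage profile depends on expected compute consumption, a rough upper bound on Databricks Units (DBUs) per hour can help when choosing between the low and high profiles. This is a minimal Python sketch using the values in the cluster policy table. It assumes, without confirmation on this page, that the "Databricks Units" column means DBUs consumed per node per hour and that the driver node uses the same instance type as the workers. It does not convert DBUs to dollars, because the ABS charging rates are not stated here. `dbu_per_hour` is a hypothetical helper.

```python
from __future__ import annotations

# Cluster policy limits copied from the table on this page.
# Assumption: "Databricks Units" = DBUs consumed per node per hour.
POLICIES: dict[str, dict[str, float]] = {
    "DS3 v2": {"max_workers": 5, "dbu_per_node_hour": 0.75},
    "D13 v2": {"max_workers": 4, "dbu_per_node_hour": 2.0},
    "F16s v2": {"max_workers": 4, "dbu_per_node_hour": 3.0},
}


def dbu_per_hour(instance: str, workers: int | None = None,
                 include_driver: bool = True) -> float:
    """Estimate DBUs per hour for a cluster of the given instance type.

    workers defaults to the policy's maximum autoscale size. The driver is
    assumed (hypothetically) to be the same instance type as the workers.
    """
    policy = POLICIES[instance]
    max_workers = int(policy["max_workers"])
    n_workers = max_workers if workers is None else workers
    if not 0 <= n_workers <= max_workers:
        raise ValueError(f"{instance} allows 0 to {max_workers} workers, got {n_workers}")
    nodes = n_workers + (1 if include_driver else 0)
    return nodes * policy["dbu_per_node_hour"]


if __name__ == "__main__":
    for name in POLICIES:
        print(f"{name}: up to {dbu_per_hour(name)} DBU/hour at full autoscale")
```

Under these assumptions, a DS3 v2 cluster at full autoscale (5 workers plus a driver) would use about 6 × 0.75 = 4.5 DBU per hour.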

To ensure the security and integrity of the DataLab, clients will not have administrative access to the Databricks workspace and some usage restrictions may apply. Administration will be exclusively managed by the ABS, aligning with the specified usage restrictions of the DataLab.

Please contact info@mydata.abs.gov.au with any questions.

Managing your R & Python packages

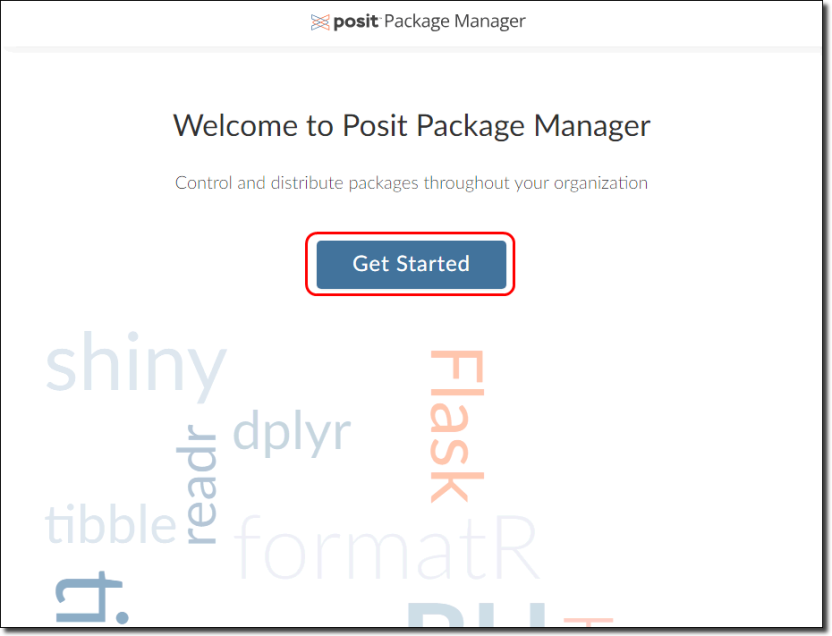

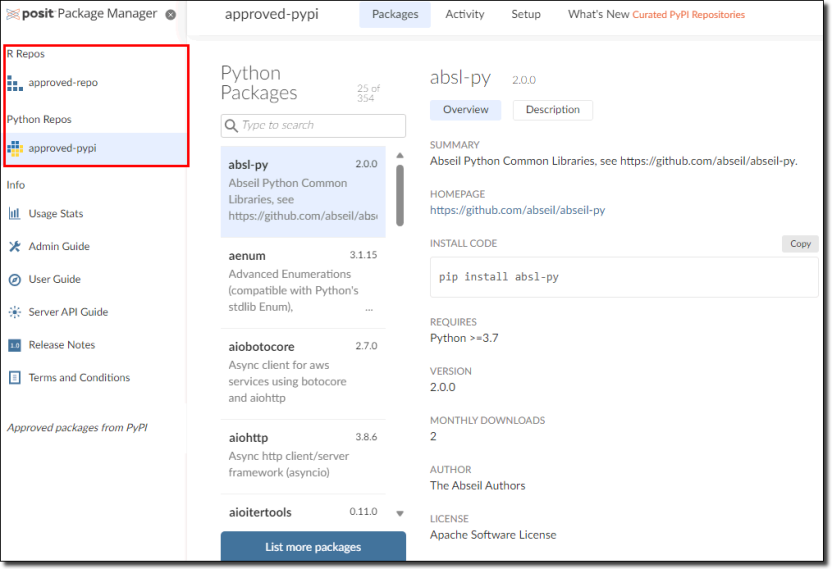

If you are working with a specific set of R and/or Python packages, you can now manage these using the Package Manager shortcut on your desktop.

In the Package Manager, click 'Get Started' to navigate to the available packages. You can use this tool to search for packages (in the left column) and install the packages you want to use for your project. If the packages you need are not listed, you can request them using the Contact us page.

Accessing earlier versions of R and Python Packages from Posit

The following describes how to access older versions of packages from Posit for each language. If you need a new package, or a newer or older version of a package, from CRAN (for R) or PyPI (for Python), submit the 'Request new Software or Software packages' form via the Contact us page. The package will be made available subject to availability and security checks. Packages not from CRAN or PyPI are not available through Posit; they go through a separate security screening process and are only approved for access in the DataLab if they pass it.

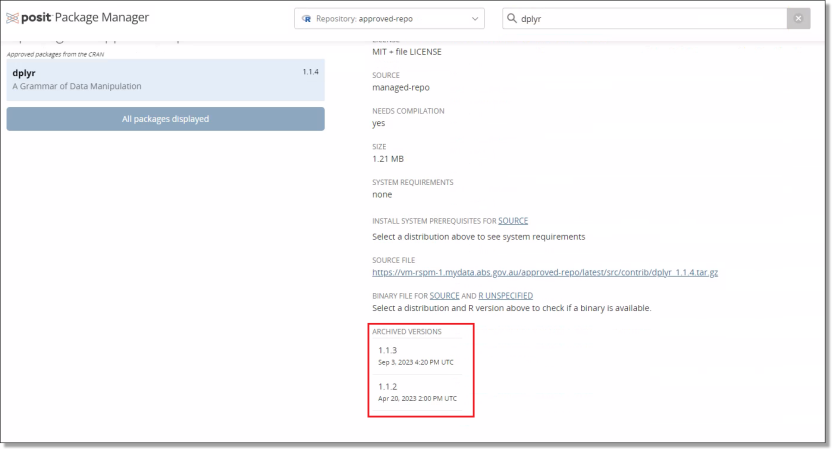

To access different package versions in R

Older package versions can be installed from Posit in your chosen R environment using the 'devtools' package, e.g. `devtools::install_version("your package", version = "your package version")`. The available versions of each R package are listed at the bottom of the Posit page for that package. An example for the 'dplyr' package can be seen below.

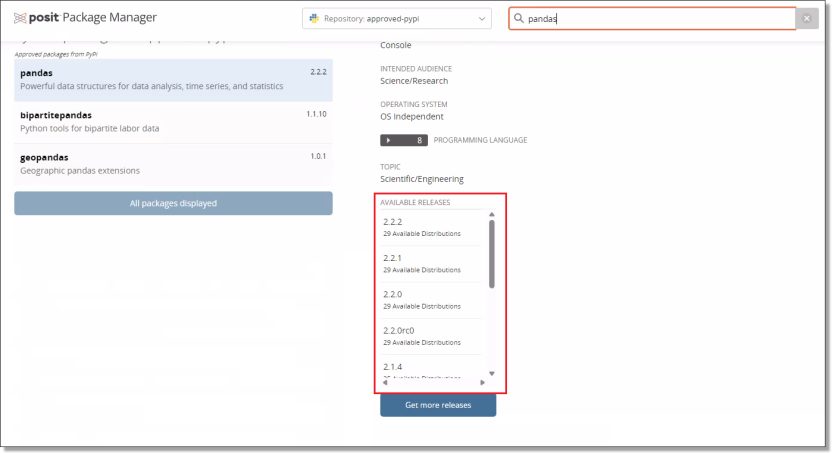

To access different package versions in Python

To install an older version of a Python package, specify the version when installing it with pip, e.g. `pip install pandas==2.1.0`. The available versions of each Python package are also listed at the bottom of the relevant Posit page. An example for the 'pandas' package can be seen below.
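After pinning versions with pip, it can be worth confirming that the environment you are running in actually has those versions installed. This is a minimal Python sketch using the standard library's `importlib.metadata`; `check_pinned` is a hypothetical helper, and the pin shown simply mirrors the pip example on this page. It compares version strings exactly, so it does not apply PEP 440 normalisation (for example, `2.1` and `2.1.0` would be reported as different).

```python
from __future__ import annotations

from importlib.metadata import PackageNotFoundError, version


def check_pinned(pins: dict[str, str]) -> dict[str, str | None]:
    """Return packages whose installed version differs from the pin.

    A value of None means the package is not installed at all.
    """
    mismatches: dict[str, str | None] = {}
    for name, wanted in pins.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            mismatches[name] = None
            continue
        if installed != wanted:
            mismatches[name] = installed
    return mismatches


if __name__ == "__main__":
    # Example pin matching the pip command on this page; adjust for your project.
    print(check_pinned({"pandas": "2.1.0"}))
```

An empty result means every pinned package is installed at the expected version.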

Virtual machines

What are virtual machines?

Virtual machines, or VMs, are the virtual workspaces you use to undertake your analysis in the DataLab. VMs are created by the ABS as part of the project establishment process, described in About DataLab.

You have one VM for each project. This is a design feature to prevent data from one project being accessed by another project. You can run analysis on multiple virtual machines at the same time, but only if you have been granted local disk space. See Run jobs on offline VMs (desktops). You may want to request this option if you have multiple projects that you are actively involved in.

Virtual machine sizes

The ABS offers standard and non-standard VM sizes. Standard VMs are included in the DataLab annual fee, whereas non-standard VMs are subject to additional charges as they are more expensive to run. For more information on charges, see DataLab charges.

Researchers may request a non-standard machine for performance or productivity reasons. If you require a non-standard machine, consult your project lead, who will need to send the ABS an updated project proposal.

Currently offered VMs and approximate running costs are listed in the tables below.

Standard virtual machines

Large VMs are provided as the default and most projects operate efficiently with this size.

If you have a small or medium machine, it can be upgraded to a large at no additional charge. Please contact info@mydata.abs.gov.au for further assistance.

| Name | CPU cores | RAM | Approx. cost per hour ($AUD) |
|---|---|---|---|
| Small | 2 | 8GB | Not applicable (included in the DataLab annual fee) |
| Medium | 2 | 16GB | Not applicable (included in the DataLab annual fee) |
| Large | 8 | 64GB | Not applicable (included in the DataLab annual fee) |

Non-standard virtual machines

Non-standard machines are available on request and charged quarterly based on usage.

Note: A VM continues to incur running costs until it is stopped, even when it is not in use. The ABS recommends that researchers shut down their machine during periods of inactivity to avoid unintended charges. Disconnecting, or closing your machine window, is not sufficient: the VM must be shut down completely, as described in the VM Management Options, to stop running costs.

If you have any questions or require further assistance, please contact info@mydata.abs.gov.au.

| Name | CPU cores | RAM | Approx. cost per hour ($AUD) |
|---|---|---|---|
| X-Large | 16 | 128GB | $1.80 |
| XX-Large | 32 | 256GB | $3.80 |
| XXX-Large | 64 | 504GB | $6.40 |
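Because non-standard VMs are charged on usage and keep incurring costs until they are shut down, it can be useful to estimate what idle running time adds up to. This is a minimal Python sketch using the approximate hourly rates in the table above; actual charges may differ, and the scenario in the usage note is purely illustrative. `estimate_cost` is a hypothetical helper.

```python
# Approximate hourly rates ($AUD) from the non-standard VM table on this page.
HOURLY_RATE_AUD = {
    "X-Large": 1.80,
    "XX-Large": 3.80,
    "XXX-Large": 6.40,
}


def estimate_cost(vm: str, hours_per_day: float, days: int) -> float:
    """Approximate running cost in $AUD, rounded to cents."""
    return round(HOURLY_RATE_AUD[vm] * hours_per_day * days, 2)


if __name__ == "__main__":
    # Illustrative scenario: an X-Large VM left running 5 hours a day longer
    # than needed, over 65 working days.
    print(estimate_cost("X-Large", 5, 65))
```

For example, 5 idle hours × $1.80 × 65 days comes to about $585 that shutting the VM down would avoid.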

Specialised and custom non-standard virtual machines

The following specialised VMs (also non-standard), which support machine learning and high-performance computing, can also be requested. These requests are assessed case by case, require appropriate justification, and are subject to quote. If the VM you need is not listed, the ABS may be able to provide a customised option at an additional charge; in your justification, describe why the available machines do not meet your needs. A list of virtual machines by region is available on the Azure website.

The names assigned to these VMs are unrelated to Azure naming conventions. The ABS reviews the VM options it provides periodically, so please revisit this page for updates.

| Name | CPU cores | RAM | GPU | Approx. cost per hour ($AUD) |
|---|---|---|---|---|
| Large GPU | 8 | 56GB | Tesla T4 16GB | $1.50 |
| X-Large GPU | 16 | 110GB | Tesla T4 16GB | $2.40 |

Sign out or disconnect from your DataLab session

If you need to close your DataLab session but want to keep your analysis running, you can sign out or disconnect from the VM. Your programs will keep running until the nightly shutdown at 10pm. If you have turned on the ‘Bypass’ option, the shutdown instead occurs at the same time (10pm) on a later date.
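Before starting a long job and disconnecting, you may want to check whether it can finish before the 10pm nightly shutdown. This is a minimal Python sketch, assuming the shutdown happens at 22:00 according to the VM's own clock; it does not account for the ‘Bypass’ option. `time_until_shutdown` and `fits_before_shutdown` are hypothetical helpers, and the expected runtime is your own estimate.

```python
from datetime import datetime, time, timedelta

SHUTDOWN = time(22, 0)  # nightly shutdown time stated on this page


def time_until_shutdown(now: datetime) -> timedelta:
    """Time remaining until the next 22:00 shutdown after `now`."""
    next_shutdown = datetime.combine(now.date(), SHUTDOWN)
    if now >= next_shutdown:
        # Already past today's shutdown time, so use tomorrow's.
        next_shutdown += timedelta(days=1)
    return next_shutdown - now


def fits_before_shutdown(now: datetime, expected_runtime: timedelta) -> bool:
    """True if a job expected to take expected_runtime should finish in time."""
    return expected_runtime <= time_until_shutdown(now)


if __name__ == "__main__":
    remaining = time_until_shutdown(datetime.now())
    print(f"Time until nightly shutdown: {remaining}")
```

If the job will not fit, consider splitting it, saving intermediate results to your P drive, or discussing the ‘Bypass’ option.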

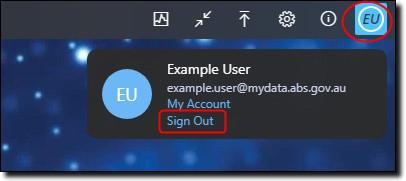

To sign out of your workspace, click on the button at the top right of your window that shows your initials, then select ‘Sign Out’.

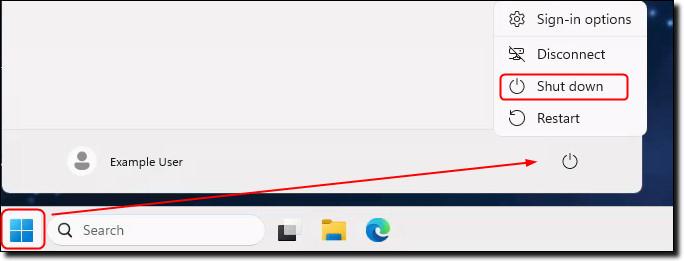

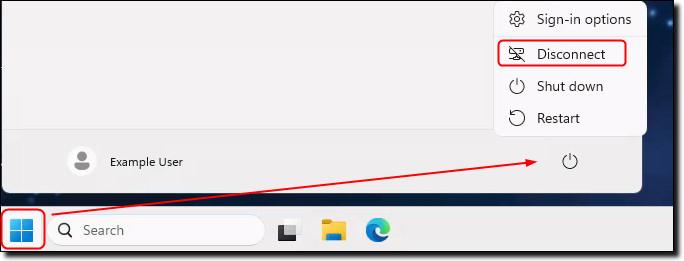

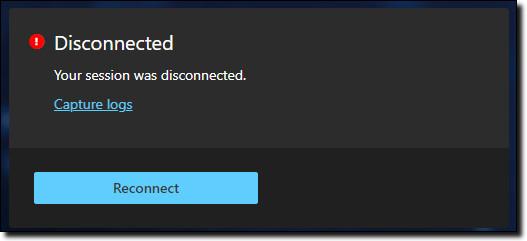

To disconnect from your session, first click on the Start menu and then select the power symbol. An option to ‘Disconnect’ will appear. Selecting this will immediately disconnect you from the session.

Please note that the session window remains visible until you close the tab. If you are using the web client version of Azure Virtual Desktop (AVD), you may need to minimise your window to see the option to close the tab.

If you want to close your session and end all programs you have running, simply shut down your DataLab session.

To shut down your session, first click on the Start menu and then select the power symbol. An option to ‘Shut down’ will appear. Selecting this will immediately shut down your session.